Exploratory Data Analysis

Jon Reades - j.reades@ucl.ac.uk

1st October 2025

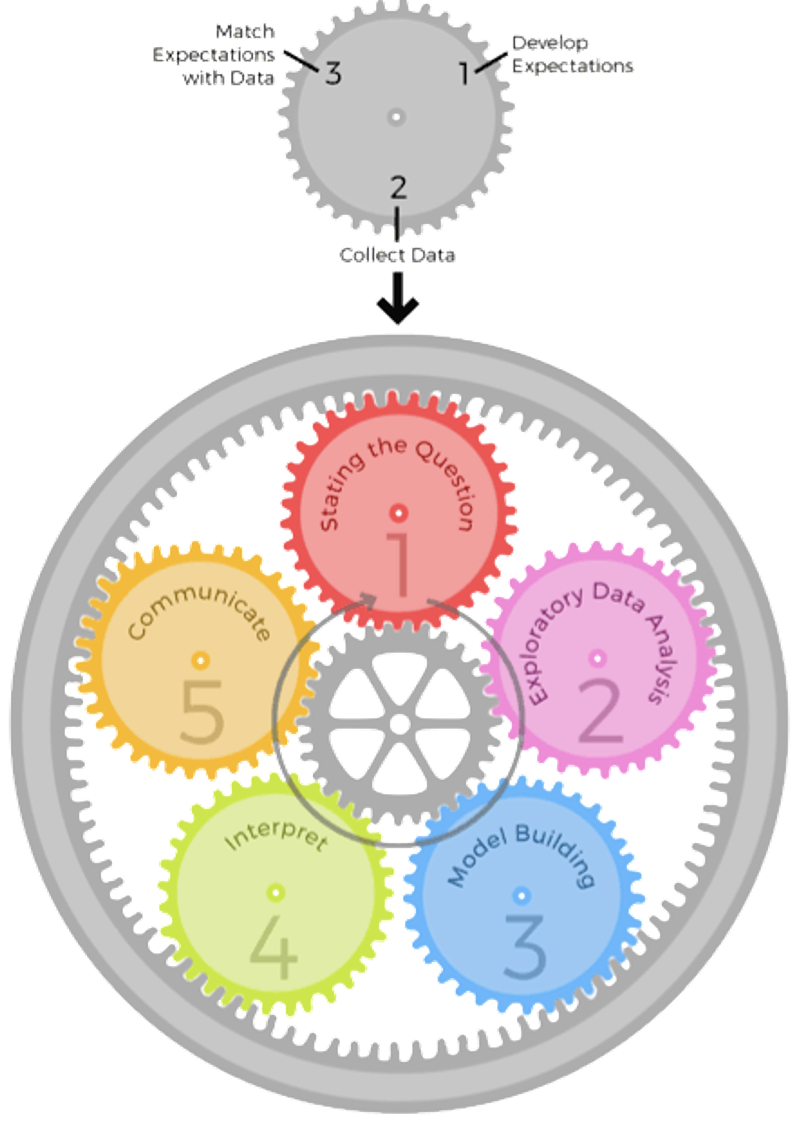

Epicyclic Feedback

Peng and Matsui, The Art of Data Science, p.8

| Stage | Set Expectations | Collect Information | Revise Expectations |
|---|---|---|---|
| Question | Question is of interest to audience | Literature search/experts | Sharpen question |
| EDA | Data are appropriate for question | Make exploratory plots | Refine question or collect more data |
| Modelling | Primary model answers question | Fit secondary models / analysis | Revise model to include more predictors |
| Interpretation | Interpretation provides specific and meaningful answer | Interpret analyses with focus on effect and uncertainty | Revise EDA and/or models to provide more specific answers |
| Communication | Process & results are complete and meaningful | Seek feedback | Revise analyses or approach to presentation |

Approaching EDA

There’s no hard and fast way of doing EDA, but as a general rule you’re looking to:

- Clean

- Canonicalise

- Clean More

- Visualise & Describe

- Review

- Clean Some More

- …

The ‘joke’ is that 80% of Data Science is data cleaning.

A Related Take

- Descriptive Statistics: get a high-level understanding of your dataset.

- Missing values: come to terms with how bad your dataset is.

- Distributions and Outliers: and why countries that insist on using different units make our jobs so much harder.

- Correlations: and why sometimes even the most obvious patterns still require some investigating.

Another Take

Here’s another view of how to do EDA:

- Preview data randomly and substantially

- Check totals such as number of entries and column types

- Check nulls such as at row and column levels

- Check duplicates: do IDs recurr, did the servers fail

- Plot distribution of numeric data (univariate and pairwise joint distribution)

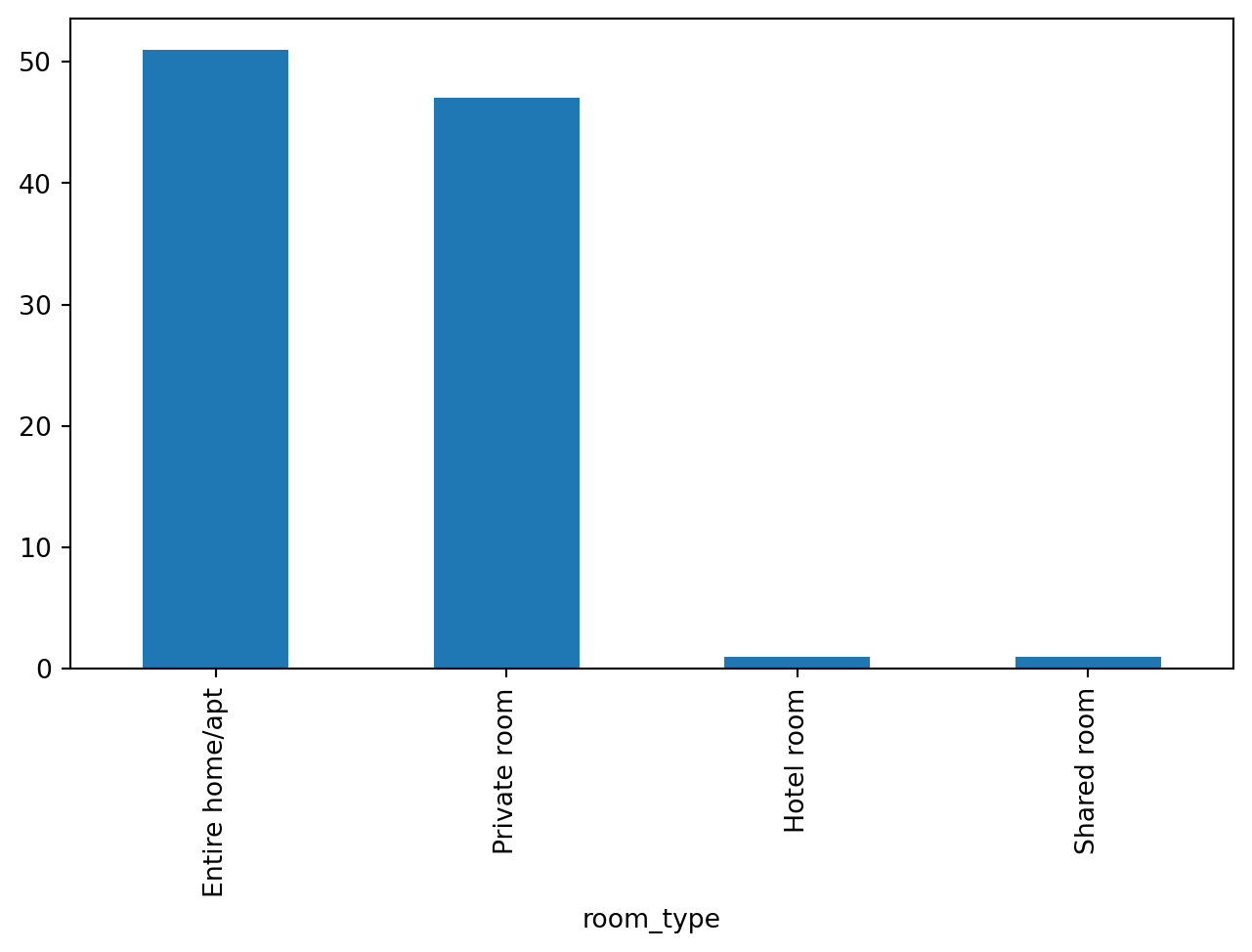

- Plot count distribution of categorical data

- Analyse time series of numeric data by daily, monthly and yearly frequencies
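Most items on that checklist map onto a single pandas call. Here is a minimal sketch on a small made-up data frame; the column names (`room_type`, `price`, `last_review`) are assumptions modelled on typical listings data, not taken from the slides. It computes the checks rather than plotting them, to keep it short.

```python
import numpy as np
import pandas as pd

# Made-up listings for illustration only (not the Inside Airbnb sample);
# the column names are assumptions modelled on typical listings data
df = pd.DataFrame({
    "id": [1, 2, 3, 3, 4, 5],
    "room_type": ["Entire home", "Private room", "Private room",
                  "Private room", "Shared room", "Entire home"],
    "price": [120.0, 45.0, 60.0, 60.0, np.nan, 200.0],
    "last_review": pd.to_datetime(["2023-01-05", "2023-01-20", "2023-02-11",
                                   "2023-02-11", "2023-03-02", "2023-03-30"]),
})

# Preview a random sample rather than only the first few rows
print(df.sample(n=3, random_state=42))

# Totals: number of entries and column types
print(df.shape)
print(df.dtypes)

# Nulls per column and per row
col_nulls = df.isna().sum()
row_nulls = df.isna().sum(axis=1)

# Duplicates: recurring IDs, and rows that are repeated in full
dup_ids = df["id"].duplicated().sum()
dup_rows = df.duplicated().sum()

# Numeric distribution (summary only; plotting omitted for brevity)
print(df["price"].describe())

# Count distribution of a categorical column
room_counts = df["room_type"].value_counts()

# Monthly time series ('MS' = month start); use 'D' for daily
monthly = df.set_index("last_review")["price"].resample("MS").mean()
print(monthly)
```

Note that `duplicated()` flags the *second and later* occurrences only, so a count of 1 here means one extra copy, not two affected rows.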

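In Practice

Getting Started

You can follow along by loading the Inside Airbnb sample:

```python
import pandas as pd
import geopandas as gpd

# Sample of Inside Airbnb listings
url = 'https://bit.ly/3I0XDrq'
df = pd.read_csv(url)
df.set_index('id', inplace=True)

# Prices arrive as strings such as '$80.00': strip the '$' (and any
# thousands separator, e.g. '$1,200.00') before converting to float
df['price'] = (
    df.price.str.replace('$', '', regex=False)
            .str.replace(',', '', regex=False)
            .astype('float')
)

# Build point geometries from the coordinate columns (WGS84 lat/long)
gdf = gpd.GeoDataFrame(
    df,
    geometry=gpd.points_from_xy(df['longitude'], df['latitude'], crs='epsg:4326')
)
```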

What Can We Do?1 (Series)

print(f"Host count is {gdf.host_name.count()}")

print(f"Mean is {gdf.price.mean():.0f}")

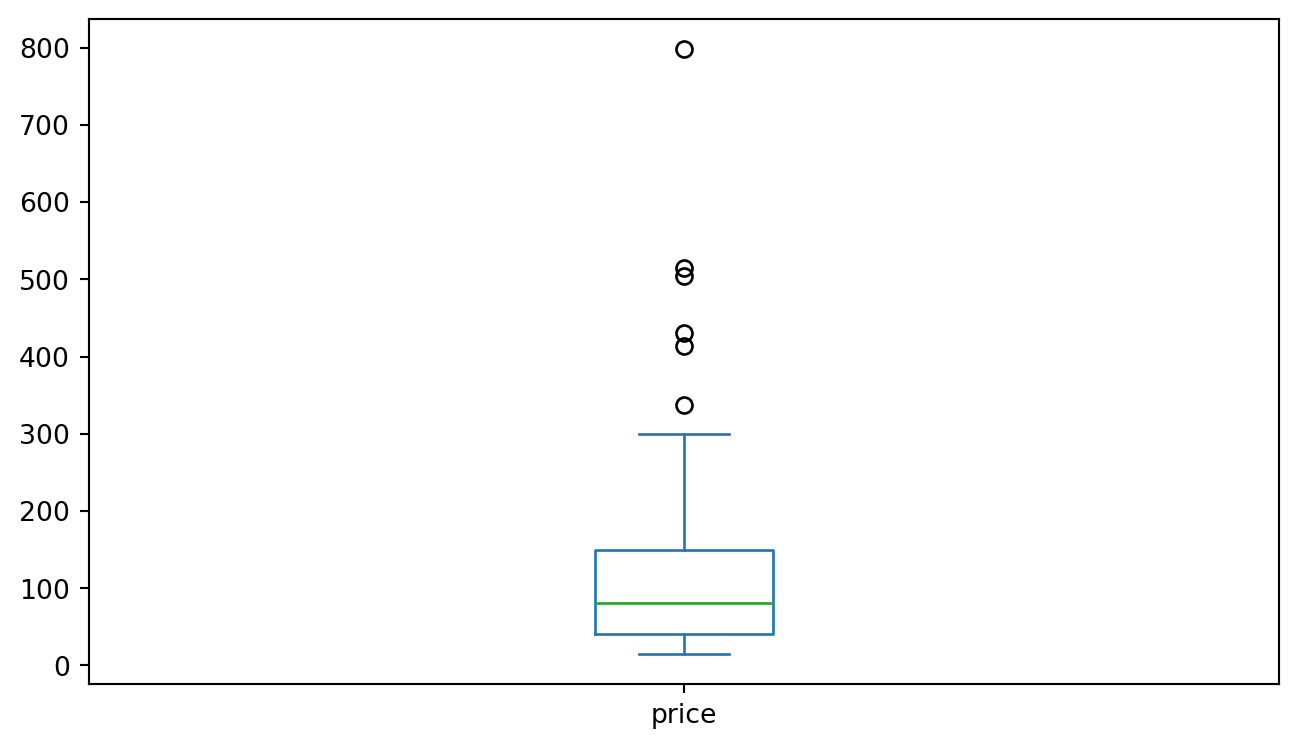

print(f"Max price is {gdf.price.max()}")

print(f"Min price is {gdf.price.min()}")

print(f"Median price is {gdf.price.median()}")

print(f"Standard dev is {gdf.price.std():.2f}")

print(f"25th quantile is {gdf.price.quantile(q=0.25)}")Host count is 100

Mean is 118

Max price is 798.0

Min price is 15.0

Median price is 80.5

Standard dev is 123.87

25th quantile is 40.75What Can We Do?1 (Data Frame Edition)

| Command | Returns |

|---|---|


Measures

So pandas provides functions for commonly used measures:
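A minimal sketch of those measures applied to a whole data frame, using a small made-up frame rather than the Airbnb sample. Each call works column by column and returns a Series; `numeric_only=True` skips text columns such as `room_type`.

```python
import pandas as pd

# Made-up data standing in for the listings data frame
df = pd.DataFrame({
    "price": [40.0, 80.0, 120.0, 60.0],
    "minimum_nights": [1, 3, 2, 30],
    "room_type": ["Private room", "Entire home", "Entire home", "Private room"],
})

counts = df.count()                            # non-null values per column
means = df.mean(numeric_only=True)             # skip non-numeric columns
medians = df.median(numeric_only=True)
stds = df.std(numeric_only=True)               # sample standard deviation
q25 = df.quantile(q=0.25, numeric_only=True)   # linear interpolation by default

# describe() bundles count, mean, std, min, quartiles and max into one table
# (for a mixed frame it summarises only the numeric columns by default)
summary = df.describe()
print(summary)
```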

More Complex Measures

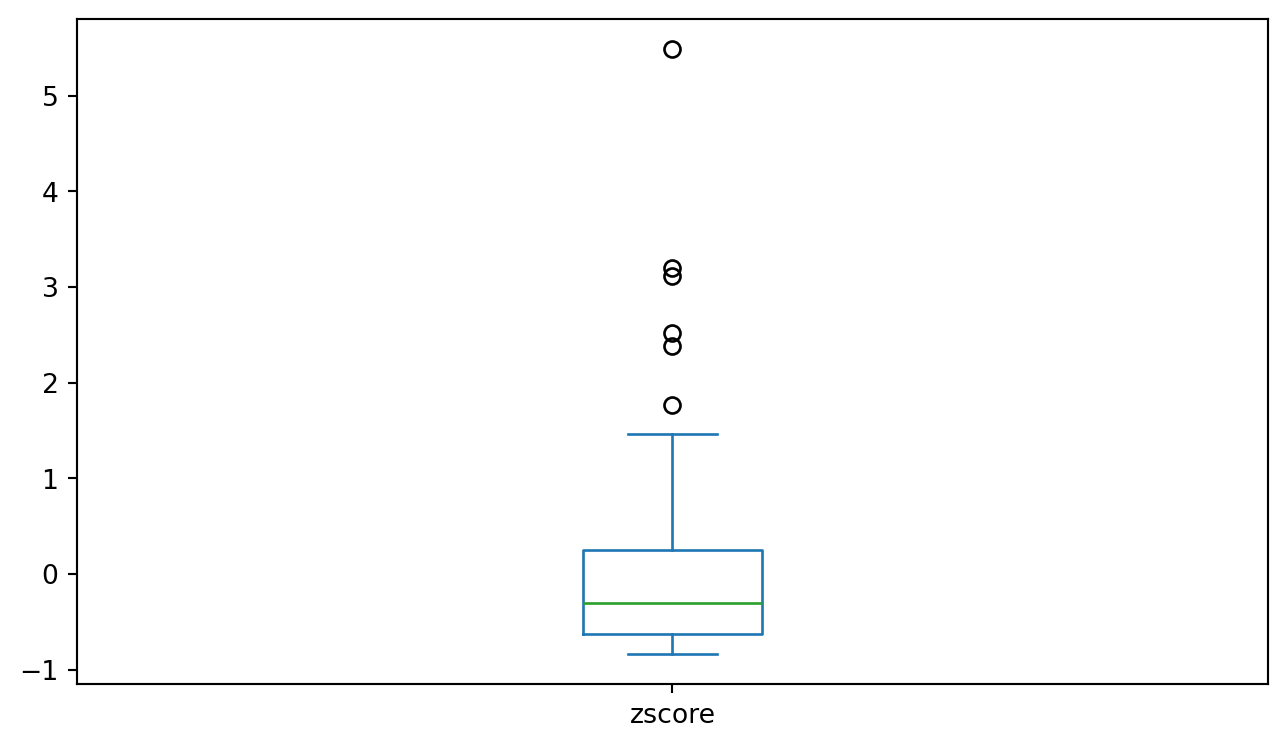

Pandas also makes it easy to derive new variables… Here’s the z-score:
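A minimal sketch on made-up prices: the z-score is (value − mean) / standard deviation, so it says how many standard deviations each listing sits above or below the average. The column name `zscore` is just an illustrative choice.

```python
import pandas as pd

# Made-up prices for illustration (not the Inside Airbnb sample)
df = pd.DataFrame({"price": [40.0, 60.0, 80.0, 100.0, 220.0]})

# z-score: (value - mean) / standard deviation.
# pandas' std() is the sample standard deviation (ddof=1) by default.
df["zscore"] = (df.price - df.price.mean()) / df.price.std()

print(df.round(2))
```

By construction the new column has a mean of 0 and a standard deviation of 1, which makes variables measured in different units directly comparable.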

And Even More Complex

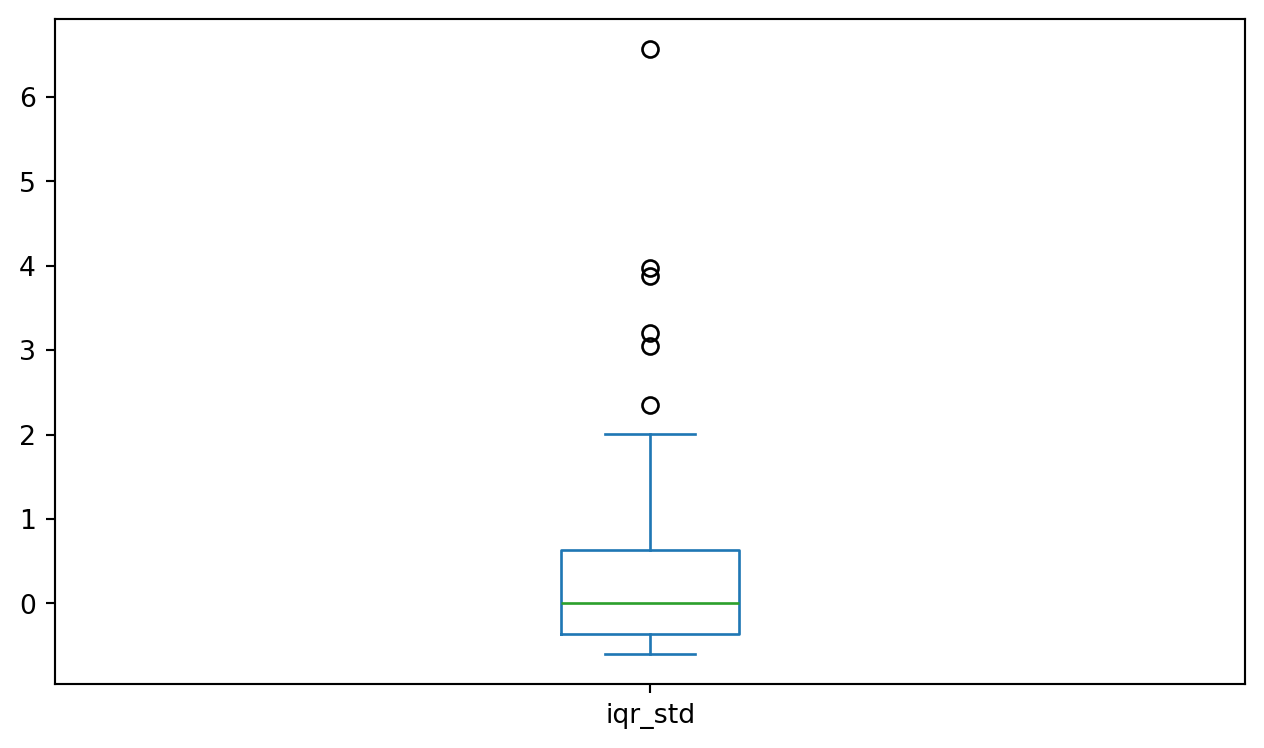

And here’s the Interquartile Range Standardised score:
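A minimal sketch, assuming the common definition (value − median) / (Q3 − Q1), i.e. centring on the median and scaling by the interquartile range. The data and the column name `iqr_score` are made up for illustration.

```python
import pandas as pd

# Made-up prices for illustration (not the Inside Airbnb sample)
df = pd.DataFrame({"price": [40.0, 60.0, 80.0, 100.0, 220.0]})

q1 = df.price.quantile(0.25)
q3 = df.price.quantile(0.75)

# IQR-standardised score: (value - median) / (Q3 - Q1)
df["iqr_score"] = (df.price - df.price.median()) / (q3 - q1)

print(df)
```

Because the median and IQR ignore the tails, a single extreme price pulls this score around far less than it pulls the mean and standard deviation used by the z-score.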

The Plot Thickens

We’ll get to more complex plotting over the course of the term, but here’s a good start for exploring the data! All of the plotting we’ll do here depends on matplotlib, which is the ogre in the attic to R’s ggplot: even pandas’ own `.plot()` methods use matplotlib behind the scenes.

Get used to `import matplotlib.pyplot as plt`, as it will allow you to save and manipulate the figures created in Python. It is not the most intuitive approach (unless you’ve used MATLAB before), but it does work.
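A minimal sketch of that pattern, using made-up room-type counts: ask matplotlib for a figure and axes with `plt.subplots()`, let pandas draw onto the axes, then adjust and save through the `fig` and `ax` handles. The file name `room_types.png` is arbitrary.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up room-type counts for illustration
counts = pd.Series({"Entire home": 55, "Private room": 40, "Shared room": 5})

# Create the figure and axes explicitly, then let pandas draw onto the axes
fig, ax = plt.subplots(figsize=(6, 4))
counts.plot.bar(ax=ax)

# Holding references to fig and ax lets us tweak the plot afterwards...
ax.set_ylabel("Number of listings")
ax.set_title("Listings by room type")
fig.tight_layout()

# ...and save it to disk
fig.savefig("room_types.png", dpi=150)
plt.close(fig)
```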

Confession Time

I do like `ggplot` and sometimes even finish off graphics for articles in R just so that I can use it. That said, it is possible to generate great-looking figures in `matplotlib`; it is just often more work because it’s a lot less intuitive.

Boxplot
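As a hedged sketch (not the code behind the original slide), here is a grouped boxplot drawn directly with matplotlib’s `Axes.boxplot`, one box per room type, on made-up prices.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up listings for illustration (not the Inside Airbnb sample)
df = pd.DataFrame({
    "room_type": ["Entire home"] * 4 + ["Private room"] * 4,
    "price": [90.0, 120.0, 150.0, 400.0, 35.0, 45.0, 50.0, 70.0],
})

# One array of prices per room type, in a fixed (sorted) order
labels = sorted(df["room_type"].unique())
data = [df.loc[df["room_type"] == k, "price"].to_numpy() for k in labels]

fig, ax = plt.subplots(figsize=(6, 4))
# Box = interquartile range, line = median; points more than 1.5 x IQR
# beyond the box (the default whisker rule) are drawn individually as outliers
bp = ax.boxplot(data)
ax.set_xticklabels(labels)
ax.set_ylabel("Nightly price")
plt.close(fig)
```

With these numbers the 400 listing lies beyond the upper whisker for "Entire home" and shows up as an outlier point, which is exactly the kind of value worth double-checking during EDA.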

Frequency

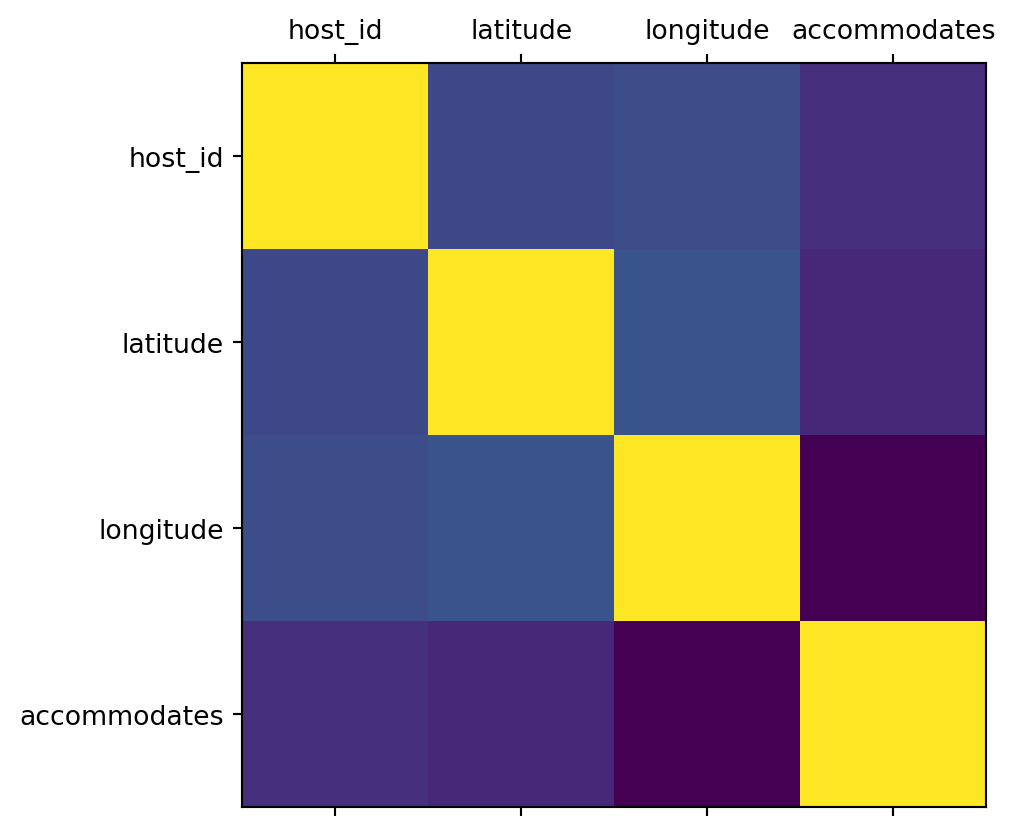
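A minimal sketch of a frequency plot (histogram) with pandas, on made-up prices: `bins=5` groups the prices into five equal-width intervals and draws the number of listings in each.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up prices for illustration (not the Inside Airbnb sample)
prices = pd.Series([25, 40, 45, 60, 75, 80, 85, 95, 120, 150, 310],
                   dtype=float, name="price")

fig, ax = plt.subplots(figsize=(6, 4))
# Group prices into 5 equal-width bins and draw the count in each bin
prices.plot.hist(bins=5, ax=ax)
ax.set_xlabel("Nightly price")
ax.set_ylabel("Number of listings")
plt.close(fig)
```

The choice of `bins` changes the story a histogram tells, so it is worth trying a few values on skewed data like prices.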

A Correlation Heatmap

We’ll get to these in more detail in a couple of weeks, but here’s some output…

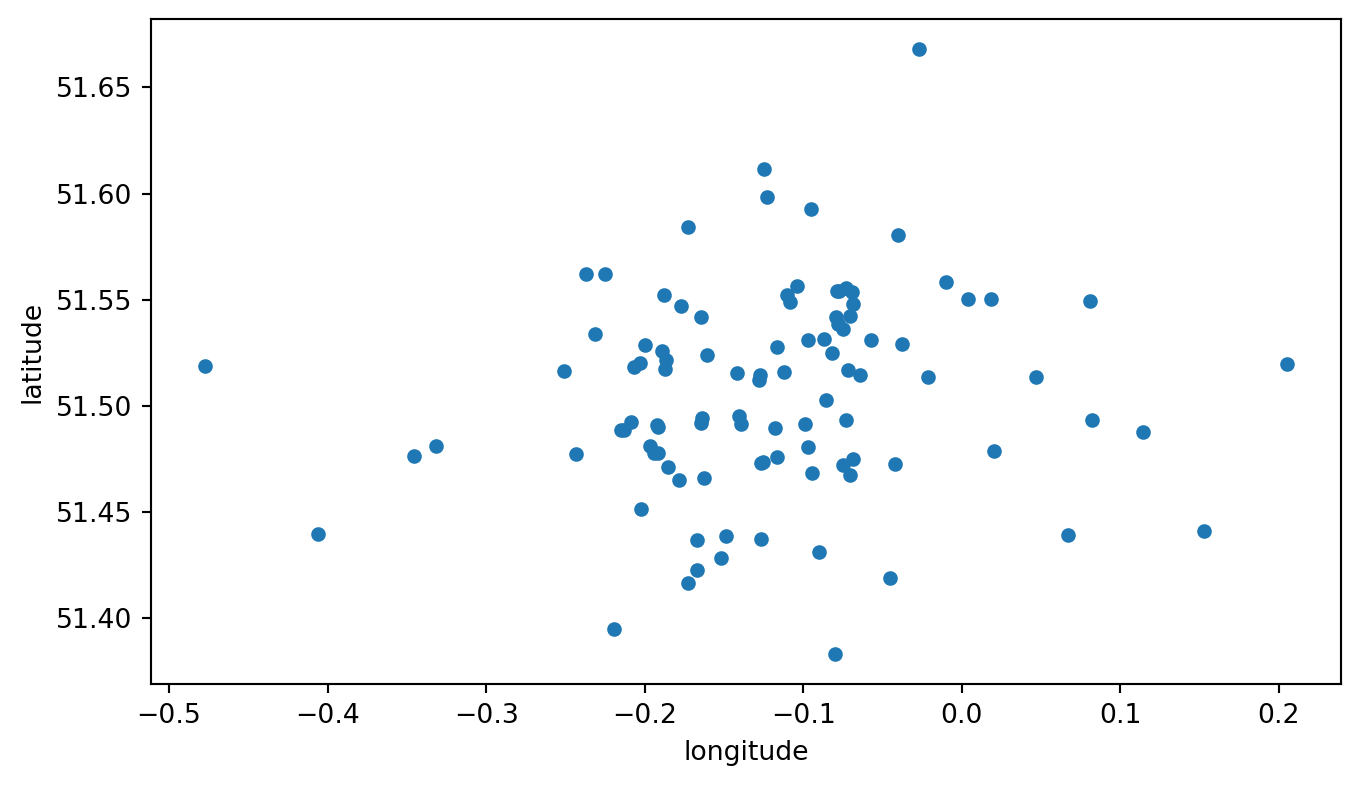

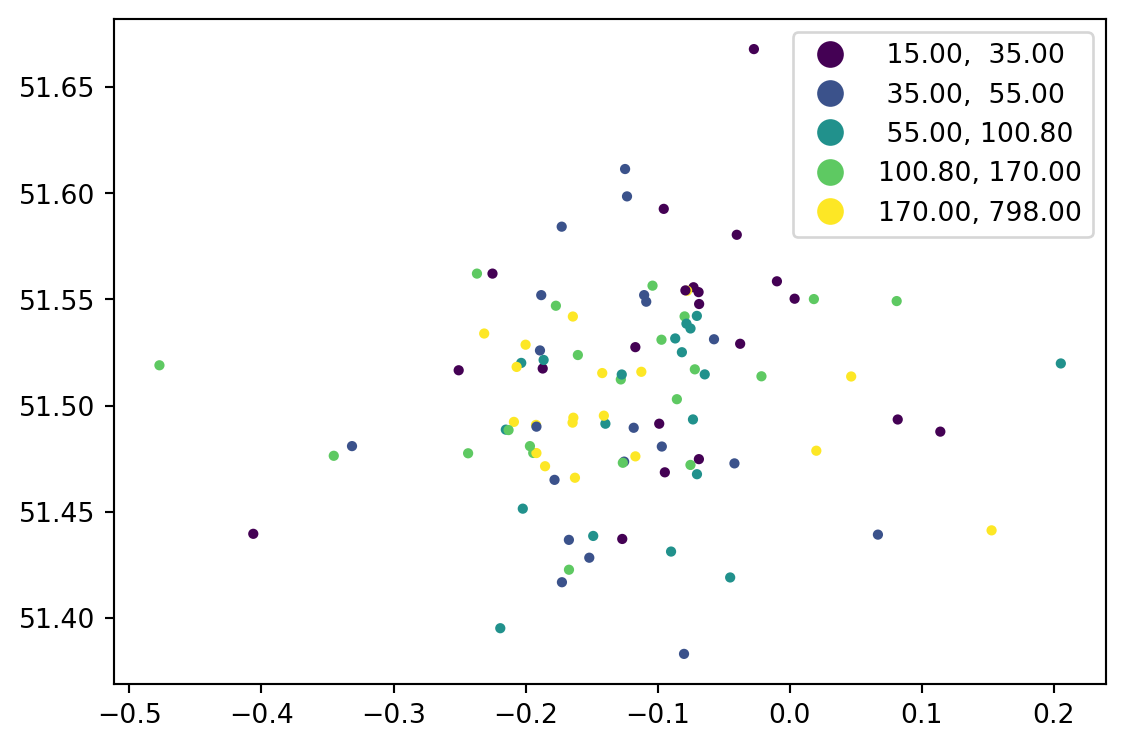
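As a sketch only (the slides do not show which library produced the heatmap), here is one way to draw a correlation heatmap with plain matplotlib: compute `df.corr()`, show it with `imshow`, and annotate each cell. The columns and values are made up.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up listings for illustration (not the Inside Airbnb sample)
df = pd.DataFrame({
    "price": [40.0, 60.0, 80.0, 100.0, 220.0],
    "accommodates": [1, 2, 2, 4, 6],
    "minimum_nights": [30, 2, 3, 1, 2],
})

# Pearson correlation by default; method="spearman" is less sensitive to outliers
corr = df.corr()

fig, ax = plt.subplots(figsize=(5, 4))
im = ax.imshow(corr.to_numpy(), vmin=-1, vmax=1, cmap="RdBu_r")
ax.set_xticks(range(len(corr.columns)))
ax.set_xticklabels(corr.columns, rotation=45, ha="right")
ax.set_yticks(range(len(corr.index)))
ax.set_yticklabels(corr.index)
fig.colorbar(im, ax=ax, label="Correlation")

# Write the coefficient into each cell
for i in range(len(corr.index)):
    for j in range(len(corr.columns)):
        ax.text(j, i, f"{corr.iloc[i, j]:.2f}", ha="center", va="center")

fig.tight_layout()
plt.close(fig)
```

Fixing `vmin=-1, vmax=1` keeps the colour scale symmetric, so white always means "no linear relationship".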

A ‘Map’

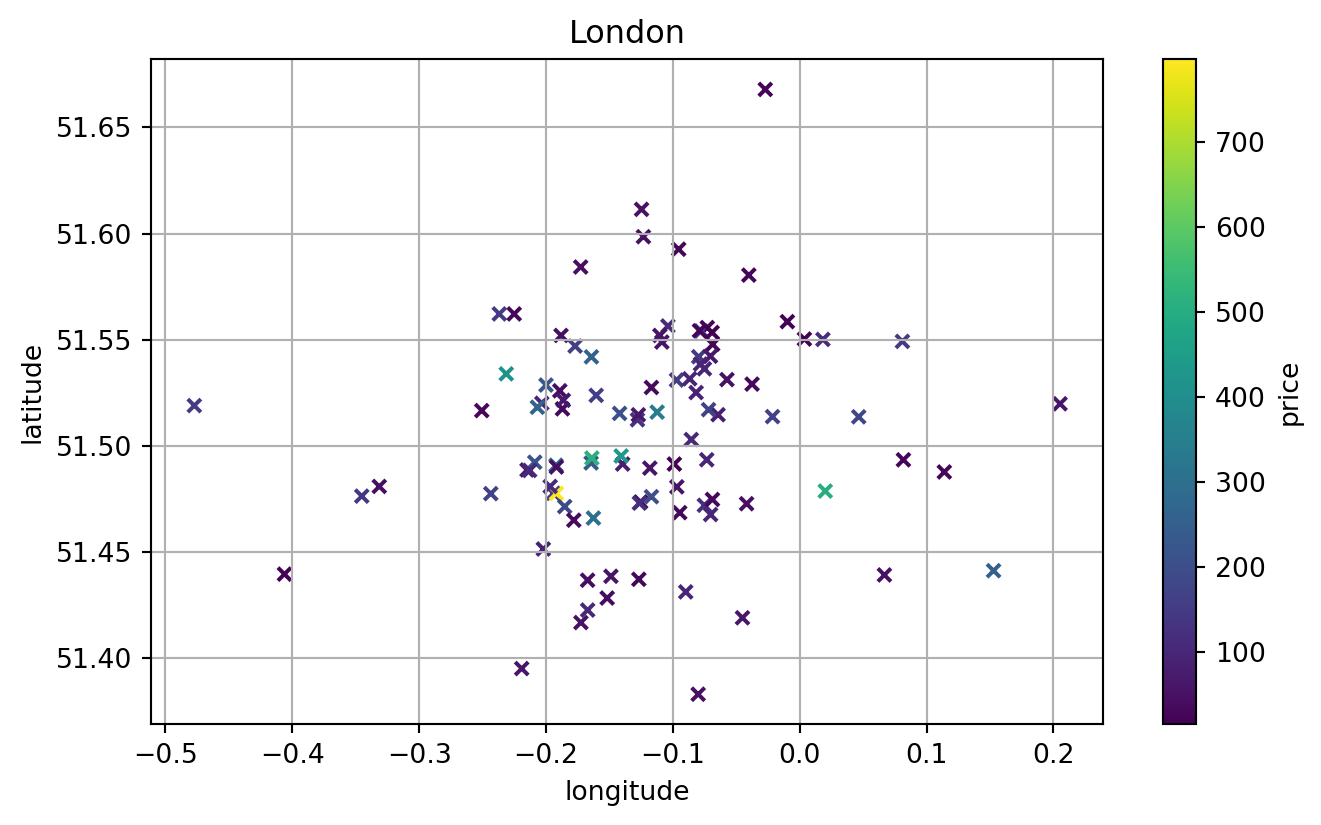
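A minimal sketch of the simplest kind of ‘map’: a pandas scatter plot of longitude against latitude, coloured by price. The coordinates and prices are made up.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up listings with made-up coordinates (not the Inside Airbnb sample)
df = pd.DataFrame({
    "longitude": [-0.12, -0.10, -0.08, -0.15, -0.05],
    "latitude": [51.50, 51.52, 51.51, 51.49, 51.53],
    "price": [80.0, 150.0, 60.0, 220.0, 45.0],
})

fig, ax = plt.subplots(figsize=(6, 5))
# Longitude on x, latitude on y, colour by price: map-like, but with no
# projection or basemap, which is why 'map' deserves its quotation marks
df.plot.scatter(x="longitude", y="latitude", c="price", cmap="viridis", s=30, ax=ax)
plt.close(fig)
```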

A Fancy ‘Map’

An Actual ‘Map’
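A hedged sketch of a step towards an actual map with geopandas: build point geometries, reproject, and let `GeoDataFrame.plot` colour the points by price. The data are made up, and the choice of Web Mercator (EPSG:3857) is an assumption suited to display rather than measurement.

```python
import geopandas as gpd
import matplotlib.pyplot as plt
import pandas as pd

# Made-up listings with made-up coordinates (not the Inside Airbnb sample)
df = pd.DataFrame({
    "longitude": [-0.12, -0.10, -0.08, -0.15, -0.05],
    "latitude": [51.50, 51.52, 51.51, 51.49, 51.53],
    "price": [80.0, 150.0, 60.0, 220.0, 45.0],
})

# Build point geometries, declaring the coordinates as WGS84 lat/long
gdf = gpd.GeoDataFrame(
    df,
    geometry=gpd.points_from_xy(df["longitude"], df["latitude"], crs="epsg:4326"),
)

# Reproject to Web Mercator (EPSG:3857), the CRS used by most web tile basemaps;
# a local projected CRS would be a better choice for measuring distances
gdf_3857 = gdf.to_crs("epsg:3857")

fig, ax = plt.subplots(figsize=(6, 5))
# Colour points by the price column and add a colourbar legend
gdf_3857.plot(column="price", cmap="viridis", legend=True, markersize=30, ax=ax)
ax.set_axis_off()
plt.close(fig)
```

Adding streets and other context underneath would need a basemap layer, for example from the separate contextily package.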

Additional Resources

There’s so much more to find, but:

- Pandas Reference

- A Guide to EDA in Python (Looks very promising)

- EDA with Pandas on Kaggle

- EDA Visualisation using Pandas

- Python EDA Analysis Tutorial

- Better EDA with Pandas Profiling [Requires module installation]

- EDA: DataPrep.eda vs Pandas-Profiling [Requires module installation]